Untangling the Web: Fixing Sexism on Wikipedia

Presentation: Prezi – Gender Bias on Wikipedia

Introduction

While women’s rights have come a long way, glaring gender inequalities remain in society. As we move more and more of our lives online, those inequalities are dragged along. Nowhere are they more apparent than in what information is available on the internet and how it is presented. A chief example is the underrepresentation of women among the editors of Wikipedia, the most accessed information website on the internet and one increasingly accepted as a reliable source of factual information.

It is inarguable that gender inequality is still a huge problem worldwide in many aspects of life. In the workplace, a recent Pew report shows that almost 20 percent of women say they have experienced gender discrimination on the job, and half of those say it had a negative impact on their career (most of them saying it had a “big” impact).[1] “The gender wage gap has hovered at about 77 cents on the dollar since 2007,” and the discrepancy increases when other marginalized characteristics, such as race, are taken into account: Latinas, for example, make 58 cents for every dollar a Latino earns, so the gap between their earnings and those of non-Hispanic white men is wider still.[2] Worldwide, women hold only 21 percent of senior management positions and only 19 percent of board roles.[3]

Academia offers another example, where the disparity between men and women is still vast. Men still predominate among tenured, published, and established professors in the sciences. “Although more women are getting PhDs in life sciences than in previous years — 52 percent in 2011, compared to 13 percent in 1970 — a drain exists after graduation, when women tend to eschew research careers in academia for other prospects.” And for the women who do pursue academic careers, the unequal treatment goes far beyond smaller salaries across the board: they carry more administrative paperwork and more professional tasks than their male colleagues, and they publish less despite working longer hours.[4]

While progress is being made in many areas, the representation of women in influential roles across the tech industry, which has always been predominantly male, is actually decreasing. In 2012, even though women held 57 percent of all occupations in the workforce, they held less than half that share of computing jobs, at 25 percent, and only 20 percent of CIOs (Chief Information Officers) at Fortune 250 companies were women. In addition, women made up 37 percent of all computer science graduates in 1985, but by 2010 that share had fallen to 18 percent (14 percent at major universities).[5]

Social Challenge

Given this last set of numbers, it is not hard to see at least part of why the gender inequalities of our daily lives are reflected in the lives we live online. Nowhere have these biases had a greater effect than on Wikipedia: not only do a great number of users go there directly for reference information, but an increasing number of other websites draw their information from it as well. It is the 6th most visited website in the world.[6] The information on the site, as well as its organization, categorization, and the level of importance it is accorded, is becoming synonymous with what is “true,” not just on Wikipedia but across the internet, and therefore in the minds of many internet users.

… every month 10 billion pages are viewed on the English version of Wikipedia alone. When a major news event takes place, such as the Boston Marathon bombings, complex, widely sourced entries spring up within hours and evolve by the minute. Because there is no other free information source like it, many online services rely on Wikipedia. Look something up on Google or ask Siri a question on your iPhone, and you’ll often get back tidbits of information pulled from the encyclopedia and delivered as straight-up facts.[7]

Wikimedia itself, the organization that runs Wikipedia, was the first to point out an extensive, systemic bias among the site’s editors. Its stated goal is to “compile the sum of all human knowledge,” a task that seems impossible when not all humans are represented among those doing the compiling. While gender inequality is far from the only bias Wikipedia needs to address, it is one of the most glaring: 91 percent of “Wikipedians” are male and only 9 percent female.[8] (They are also, statistically speaking, white, more upper class than not, educated, tech literate, geographically West and North, English speaking, single, childless, and in possession of ample leisure time.[9] While these latter descriptors of a typical Wikipedian are not the subject of this proposal, some of them could potentially be addressed by the same solutions we are proposing.)

Part of the complication is that Wikipedia has not been able to retain its active editors: their number peaked at 51,000 in 2007 and had fallen to 31,000 by summer 2013, and male editors are far more likely to contribute multiple edits over longer periods of time. This imbalance creates a mirrored bias in the information available on the site: “Among the significant problems that aren’t getting resolved is the site’s skewed coverage: its entries on Pokemon and female porn stars are comprehensive, but its pages on female novelists or places in sub-Saharan Africa are sketchy.”[10] To expand on one of these points, and to show that sexist slant comes not only from what is covered but also from how it is categorized, Amanda Filipacchi’s article in The Atlantic, “Sexism on Wikipedia Is Not the Work of a ‘Single Misguided Editor’”, offers a good working case.

American female novelists were being dragged out of the main category “American Novelists” and plopped into the subcategory “American Women Novelists.” I looked back in the “history” of these women’s pages, and saw they used to appear in the main category “American Novelists,” but they had recently been switched to the smaller female subcategory. Male novelists were allowed to remain in the main category, no matter how obscure they were.[11]

She goes on to explain why this is a major problem: the more popular and accessible main category not only lists many more men than women, it lists all of the men, while many female authors, some of them quite major, are shunted into a subcategory that readers may never find unless they search for it specifically and meticulously.

Since its inception in 2001, much of Wikipedia’s content has been taken with a grain of salt by experts, academics, and professionals, but this is increasingly changing. A 2008 search for references in published academic journals found that “citations of Wikipedia have been increasing steadily: from 1 in 2002 … to 17 in 2005 to 56 in 2007. So far Wikipedia has been cited 52 times in 2008, and it’s only August…Along with the increasing number of citations, another indicator that Wikipedia may be gaining respectability is its citation by well-known scholars.”[12] As the website becomes accepted as an authority, and its statements and research are presented as fact, it becomes more and more imperative to address the issue of editorial inequality.

Solution

Wikipedia acknowledges that it has a widespread, systemic problem attracting and retaining a more varied pool of editors, and it is making efforts to address the issue. First, it is changing its editing interface to one that is easier to use for people less tech-savvy than the usual Wikipedian. It has also begun other initiatives:

In 2012 Gardner formed two teams—now called Growth and Core Features—to try to reverse the decline by making changes to Wikipedia’s website. One idea from the researchers, software engineers, and designers in these groups was the “Thank” button, Wikipedia’s answer to Facebook’s ubiquitous “Like.” Since May, editors have been able to click the Thank button to quickly acknowledge good contributions by others. It’s the first time they have been given a tool designed solely to deliver positive feedback for individual edits, says Steven Walling, product manager on the Growth team. “There have always been one-button-push tools to react to negative edits,” he says. “But there’s never been a way to just be, like, ‘Well, that was pretty good, thanks.’” Walling’s group has focused much of its work on making life easier for new editors. One idea being tested offers newcomers suggestions about what to work on, steering them toward easy tasks such as copyediting articles that need it. The hope is this will give people time to gain confidence before they break a rule and experience the tough side of Wikipedia.

These might seem like small changes, but it is all but impossible for the foundation to get the community to support bigger adjustments. Nothing exemplifies this better than the effort to introduce the text editing approach that most people are familiar with: the one found in everyday word processing programs.[13]

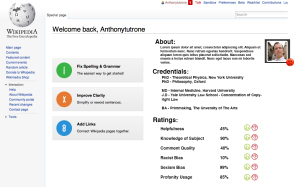

In addition to these changes, we propose working with Wikimedia to create a new user system that completely removes anonymity from the Wikipedia community. Each user would be required to provide their real name and a photograph when creating a new user profile. The accompanying image provides a mockup of the imagined user page and rating system.

Attached to each user profile is a rating system with several categories that other Wikipedia users can use to rate that user’s behavior. Categories can include:

- Helpfulness – How much does the user contribute to the particular subject matter?

- Knowledge of Subject – Is the user qualified to write and edit pages of a particular subject?

- Comment Quality – Are the comments made by the user during the discussion/revision process helpful to the process as a whole?

- Racial Bias – Does the user show a particular racial bias in their edits and discussions?

- Gender Bias – Does the user show a particular bias regarding gender in their edits and discussions?

- Additional categories can also be recommended by the community

Each category is rated from 1 to 100, and the system averages the ratings to determine whether the user is making meaningful contributions to the community. A user with a score of 100 is treated as an upstanding member. A user with a low rating has considerable limitations placed on their ability to contribute.

Users with a low rating would be restricted from:

- Creating new entries on Wikipedia

- Deleting content from already created entries

- Modifying content in entries

- Participating in discussions
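The scoring rule described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a specification: the proposal says only that category ratings are averaged and that a “low rating” triggers restrictions, so the cutoff of 50 (`LOW_RATING_THRESHOLD`), the intermediate “member” tier, the action names, and the class and function names (`UserRatings`, `standing`, `allowed`) are all hypothetical. It also assumes every category, including the bias categories, is scored so that 100 is best (for example, 100 meaning no observed bias), and it averages within each category before averaging across categories so that one heavily rated category cannot dominate.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from statistics import mean

# Hypothetical cutoff: the proposal says only that a "low rating" triggers limits.
LOW_RATING_THRESHOLD = 50
PERFECT_SCORE = 100

# The four actions the proposal would restrict for low-rated users (names are illustrative).
RESTRICTED_ACTIONS = frozenset({"create_entry", "delete_content", "modify_content", "discuss"})


@dataclass
class UserRatings:
    # Category name -> 1-100 scores given by other users.
    # Assumption: every category is oriented so that 100 is the best score.
    scores: dict[str, list[int]] = field(default_factory=dict)

    def add(self, category: str, score: int) -> None:
        if not 1 <= score <= 100:
            raise ValueError("ratings must be between 1 and 100")
        self.scores.setdefault(category, []).append(score)

    def overall(self) -> float | None:
        # Average within each category first, then across categories,
        # so a category with many ratings does not outweigh the others.
        category_means = [mean(values) for values in self.scores.values() if values]
        return mean(category_means) if category_means else None


def standing(ratings: UserRatings) -> str:
    score = ratings.overall()
    if score is None:
        return "unrated"  # New users start unrestricted (an assumption).
    if score >= PERFECT_SCORE:
        return "upstanding"
    if score < LOW_RATING_THRESHOLD:
        return "restricted"
    return "member"  # Hypothetical middle tier; the proposal does not name one.


def allowed(ratings: UserRatings, action: str) -> bool:
    # Only the restricted actions are gated; reading is always allowed.
    return not (standing(ratings) == "restricted" and action in RESTRICTED_ACTIONS)


if __name__ == "__main__":
    r = UserRatings()
    r.add("Helpfulness", 40)
    r.add("Helpfulness", 60)  # Helpfulness mean: 50
    r.add("Comment Quality", 20)  # Comment Quality mean: 20
    print(standing(r))  # restricted (overall average of 50 and 20 is 35)
    print(allowed(r, "discuss"))  # False
```

In this sketch, read-only actions such as viewing articles are never blocked; only the four restricted actions listed above depend on a user’s standing.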

If enough infractions occur, the user is locked out of the system for a limited time; once that time has elapsed, they can participate again. Each user account keeps a history of its ratings that the user cannot remove.

If an account accumulates enough bans, its owner will be removed from the community entirely and will be unable to rejoin.
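The escalation from infractions to temporary lockouts to permanent removal can likewise be sketched as a small state machine. Again, this is a hypothetical illustration: the proposal specifies no numbers, so three infractions per lockout, a seven-day lockout, and removal on the third ban (`INFRACTIONS_PER_LOCKOUT`, `LOCKOUT_DURATION`, `BANS_BEFORE_REMOVAL`) are placeholder values, and the `Account` and `Registry` names are invented for the example. Blocking re-registration by name assumes that real names are verified and unique, which the proposal does not yet address.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timedelta

# Hypothetical parameters: the proposal says only "enough infractions",
# "a limited time", and "enough bans".
INFRACTIONS_PER_LOCKOUT = 3
LOCKOUT_DURATION = timedelta(days=7)
BANS_BEFORE_REMOVAL = 3


@dataclass
class Account:
    real_name: str
    pending_infractions: int = 0  # infractions since the last lockout
    bans: int = 0
    locked_until: datetime | None = None
    removed: bool = False
    # Append-only log: the class offers no way for the user to delete entries.
    history: list[tuple[datetime, str]] = field(default_factory=list)

    def record_infraction(self, when: datetime, reason: str) -> None:
        if self.removed:
            return
        self.history.append((when, f"infraction: {reason}"))
        self.pending_infractions += 1
        if self.pending_infractions < INFRACTIONS_PER_LOCKOUT:
            return
        # Enough infractions: this counts as a ban.
        self.pending_infractions = 0
        self.bans += 1
        if self.bans >= BANS_BEFORE_REMOVAL:
            self.removed = True
            self.locked_until = None
            self.history.append((when, "removed permanently"))
        else:
            self.locked_until = when + LOCKOUT_DURATION
            self.history.append((when, f"locked out until {self.locked_until:%Y-%m-%d}"))

    def can_participate(self, now: datetime) -> bool:
        if self.removed:
            return False
        return self.locked_until is None or now >= self.locked_until


class Registry:
    """Blocks re-registration by removed users (assumes verified, unique real names)."""

    def __init__(self) -> None:
        self._accounts: dict[str, Account] = {}

    def register(self, real_name: str) -> Account:
        account = self._accounts.setdefault(real_name, Account(real_name))
        if account.removed:
            raise PermissionError(f"{real_name} was removed and cannot rejoin")
        return account


if __name__ == "__main__":
    registry = Registry()
    user = registry.register("Jane Doe")  # hypothetical user
    start = datetime(2014, 2, 6)
    for _ in range(INFRACTIONS_PER_LOCKOUT):
        user.record_infraction(start, "hostile talk-page comment")
    print(user.can_participate(start + timedelta(days=1)))  # False: still locked out
    print(user.can_participate(start + LOCKOUT_DURATION))  # True: lockout has elapsed
```

Because `history` is only ever appended to, it serves here as a simple stand-in for the proposal’s unremovable account record: it survives lockouts and its owner has no way to erase it.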

Social Impact

With this new system working alongside the changes Wikipedia is already implementing, we hope to reduce bias and address some of the site’s systemic issues. If it succeeds, the approach could spill over onto other community sites with similar problems, such as Reddit.

The gender biases of the virtual world come from our real-world inequalities; the latter provide the structure of the former. Over the past couple of decades, though, we have seen that the process also works in reverse: what becomes a social norm online is often adopted by society at large. If we succeed here, the potential social impact reaches beyond the confines of the internet and into the fabric of our real-world communities.

Continuity

Changes to the Wikipedia editing interface that encourage greater inclusion, together with a system for useful feedback, will set a new standard: once integrated, they will become a permanent and evolving part of Wikipedia. Because the site has tremendous influence, people will come to expect similar standards on other social networking sites.

Leadership

Even though anonymity has been one of the tenets of the internet since the beginning, it cannot be denied that it has damaged some of the forums so many of us use. Evidence of this can be found in the increasing number of websites that require real-name profiles to use their services. We do not think all anonymity must be done away with, but in some areas of social networking and information, it does more harm than good.

The vision we have for change is not to destroy the potential and freedom of the internet, but to find a community solution that could address a cultural problem, increasing access and usability for everyone.

Morgan Conroy received her BA in Religious Studies with a concentration in Sociology from Hollins University in 2012. She is currently a candidate for a Master’s degree in Media Studies at The New School for Public Engagement. She is passionate about sociology and feels that the best way to combat the problematic elements in our society is to be conscious of them when creating media.

Anthony Tutrone has been passionate about technology and the Internet since he first logged in to America Online. During his undergraduate career, he studied film, television, and multimedia production. Through his strong understanding of multimedia, planning, design, and development, Anthony wants to use his technical and production skills to help make the Internet a better place. He is currently a Master’s student at The New School.

Charlotte Wheeler attended the New College of California and received her BA in Sociology with a focus in Activism and Social Change. Her interests lie in using new and mixed media to illuminate urgent social problems and create new forums for groups in conflict.

[1] http://thinkprogress.org/economy/2013/12/11/3048811/pew-gender-wage-gap/ (Accessed February 6, 2014)

[2] http://www.huffingtonpost.com/2013/09/17/gender-wage-gap_n_3941180.html (Accessed February 6, 2014)

[3] http://www.grantthornton.ie/db/Attachments/IBR2013_WiB_report_final.pdf (Accessed February 6, 2014)

[4] http://www.bustle.com/articles/12024-new-study-presents-easy-fix-for-more-women-in-science-why-its-not-a-solution (Accessed February 6, 2014)

[5] http://www.theatlantic.com/technology/archive/2013/10/we-need-more-women-in-tech-the-data-prove-it/280964/ (Accessed February 6, 2014)

[6] http://www.alexa.com/topsites (Accessed February 6, 2014)

[7] http://www.technologyreview.com/featuredstory/520446/the-decline-of-wikipedia/ (Accessed February 6, 2014)

[8] http://meta.wikimedia.org/wiki/Editor_Survey_2011/Women_Editors (Accessed February 6, 2014)

[9] http://en.wikipedia.org/wiki/Wikipedia:Systemic_bias (Accessed February 6, 2014)

[10] http://www.technologyreview.com/featuredstory/520446/the-decline-of-wikipedia/ (Accessed February 6, 2014)

[11] http://www.theatlantic.com/sexes/archive/2013/04/sexism-on-wikipedia-is-not-the-work-of-a-single-misguided-editor/275405/ (Accessed February 6, 2014)

[12] http://digitalscholarship.wordpress.com/2008/09/01/is-wikipedia-becoming-a-respectable-academic-source/ (Accessed February 6, 2014)

[13] http://www.technologyreview.com/featuredstory/520446/the-decline-of-wikipedia/ (Accessed February 6, 2014)


I thoroughly enjoyed your group’s presentation (pictures of the Elvis impersonator and puppy included) on combatting sexism on Wikipedia and the web. I agree that removing anonymity and adding a checks-and-balances rating system to a site like Wikipedia shows great potential for changing the attitudes of its users. However, as you may well be aware, hostility online sometimes translates into real-life situations where people’s privacy and quality of life are disrupted.

Is there anything in this system that might help prevent offline harassment stemming from this lack of anonymity on the web? Would the system also track affiliates or associates of people who have committed these infractions?

For example, what if someone who was suspended for infractions somehow rallied enough like-minded individuals to go after their target?

Also, how would the system truly authenticate that the person is who they actually claim to be?

Anyway, this sounds like a truly exciting project and I can’t wait to see an example version of it in class!

-Jade